One of my primary research projects focuses on the development of approximate Markov chain Monte Carlo (MCMC) methods for Bayesian deep learning. Such methods are motivated by the problem of quantifying the uncertainty of predictions made by Bayesian neural networks.

Sampling the parameter posterior of a neural network via MCMC raises several challenges, which culminate in a lack of convergence to the parameter posterior. Despite this lack of convergence, the approximate predictive posterior distribution contains valuable information (Papamarkou et al., 2022).

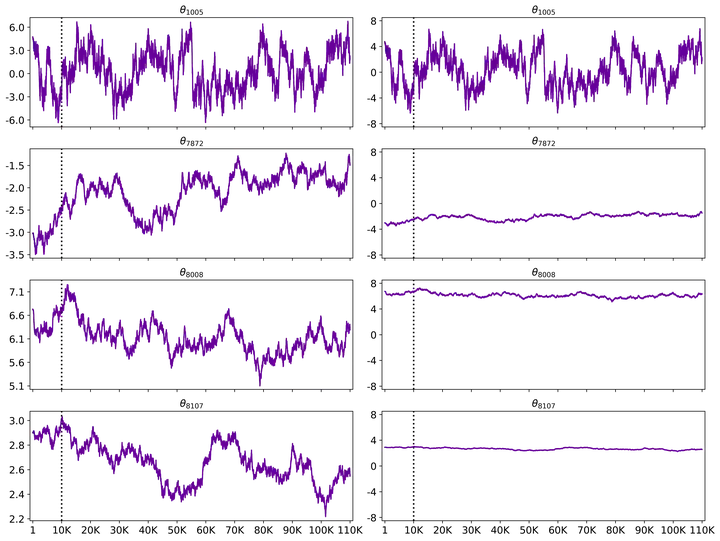
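For reference, the predictive posterior referred to above is the standard Bayesian posterior predictive. Given MCMC draws, it is typically approximated by a Monte Carlo average. The notation here (data $\mathcal{D}$, input $x$, output $y$, draws $\theta^{(1)}, \dots, \theta^{(S)}$) is introduced for illustration and is not taken from the cited work:

$$
p(y \mid x, \mathcal{D}) = \int p(y \mid x, \theta)\, p(\theta \mid \mathcal{D})\, d\theta \approx \frac{1}{S} \sum_{s=1}^{S} p\big(y \mid x, \theta^{(s)}\big).
$$

When the chain has not converged, the draws $\theta^{(s)}$ are not distributed according to $p(\theta \mid \mathcal{D})$. The average on the right is then only an approximation of the predictive posterior, which is the sense in which it is "approximate" above.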

One step towards scaling MCMC methods to sample neural network parameters is to evaluate the target density of a neural network on a subset (minibatch) of the data. By analogy with sampling data batches from a big dataset, I propose sampling subgroups of parameters from the neural network parameter space (Papamarkou, 2022). Minibatch MCMC induces an approximation to the target density. Parameter subgrouping, by contrast, can be carried out via blocked Gibbs sampling without introducing an additional approximation.
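The sketch below illustrates the parameter-subgrouping idea. It rests on assumptions made purely for illustration, none of which are taken from Papamarkou (2022): a tiny one-hidden-layer regression network, a Gaussian likelihood and prior, one block per layer, and a random-walk Metropolis update within each block (Metropolis-within-Gibbs). The function names `mlp_forward`, `log_target` and `blocked_metropolis_within_gibbs`, and all numerical settings, are hypothetical. Every block update evaluates the log target on the full dataset, so each update leaves the full posterior invariant. Swapping in a minibatch estimate of `log_target` would reintroduce the approximation that minibatch MCMC incurs.

```python
import numpy as np


def mlp_forward(theta, X, n_hidden):
    """One-hidden-layer tanh network for regression; theta is a flat parameter vector."""
    d = X.shape[1]
    i = 0
    W1 = theta[i:i + d * n_hidden].reshape(d, n_hidden)
    i += d * n_hidden
    b1 = theta[i:i + n_hidden]
    i += n_hidden
    w2 = theta[i:i + n_hidden]
    i += n_hidden
    b2 = theta[i]
    return np.tanh(X @ W1 + b1) @ w2 + b2


def log_target(theta, X, y, n_hidden, noise_sd=0.1, prior_sd=1.0):
    """Unnormalised log posterior on the FULL dataset: Gaussian likelihood + Gaussian prior."""
    resid = y - mlp_forward(theta, X, n_hidden)
    return (-0.5 * np.sum(resid ** 2) / noise_sd ** 2
            - 0.5 * np.sum(theta ** 2) / prior_sd ** 2)


def blocked_metropolis_within_gibbs(theta0, blocks, X, y, n_hidden,
                                    n_iter, step_sizes, rng):
    """Cycle through parameter blocks, proposing a random-walk move for one block at a time."""
    theta = theta0.copy()
    current = log_target(theta, X, y, n_hidden)
    samples = np.empty((n_iter, theta.size))
    accepted = np.zeros(len(blocks))
    for t in range(n_iter):
        for k, idx in enumerate(blocks):
            proposal = theta.copy()
            # Only the coordinates in block k move; all other parameters stay fixed.
            proposal[idx] += step_sizes[k] * rng.standard_normal(len(idx))
            candidate = log_target(proposal, X, y, n_hidden)
            # Symmetric proposal, so the Metropolis acceptance ratio is the target ratio.
            if np.log(rng.uniform()) < candidate - current:
                theta, current = proposal, candidate
                accepted[k] += 1
        samples[t] = theta
    return samples, accepted / n_iter


# Toy data: noisy sine curve (illustrative only).
rng = np.random.default_rng(0)
X = rng.uniform(-2.0, 2.0, size=(50, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(50)

n_hidden = 5
n_params = X.shape[1] * n_hidden + n_hidden + n_hidden + 1  # 16 parameters
# One block per layer: (W1, b1) are indices 0-9, (w2, b2) are indices 10-15.
blocks = [np.arange(0, 10), np.arange(10, 16)]

samples, acceptance = blocked_metropolis_within_gibbs(
    np.zeros(n_params), blocks, X, y, n_hidden,
    n_iter=2000, step_sizes=[0.05, 0.02], rng=rng)
```

Keeping a separate step size per block is one design choice this structure allows. The returned per-block acceptance rates show whether a given block's proposals are too large.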

The proposal to sample each parameter subgroup separately opens up directions for future computational and theoretical work, which are detailed in Section 6 of Papamarkou (2022).